Banking Challenges in Cash Management

Custom-made solutions

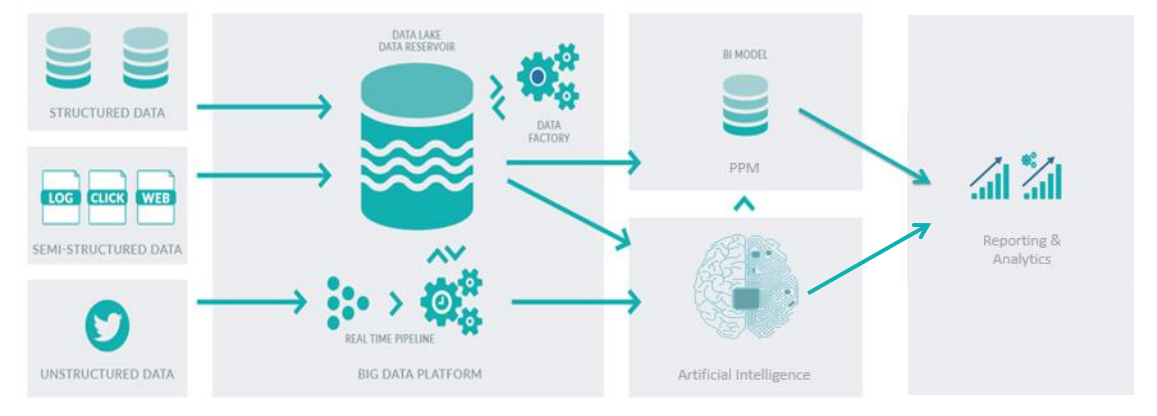

Traditional approach


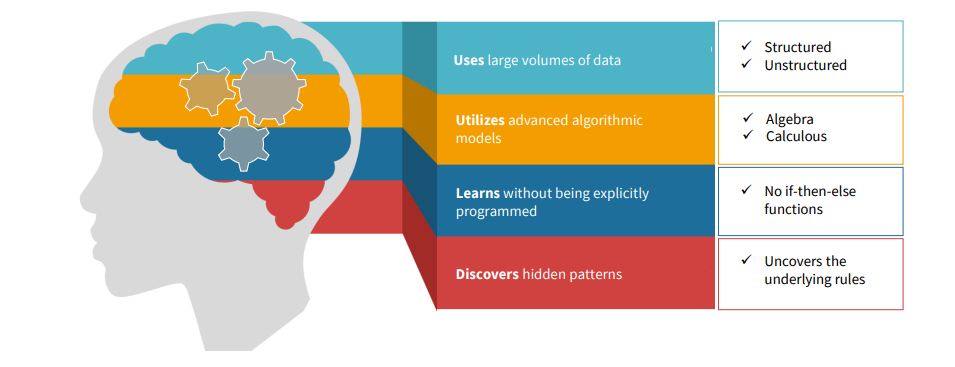

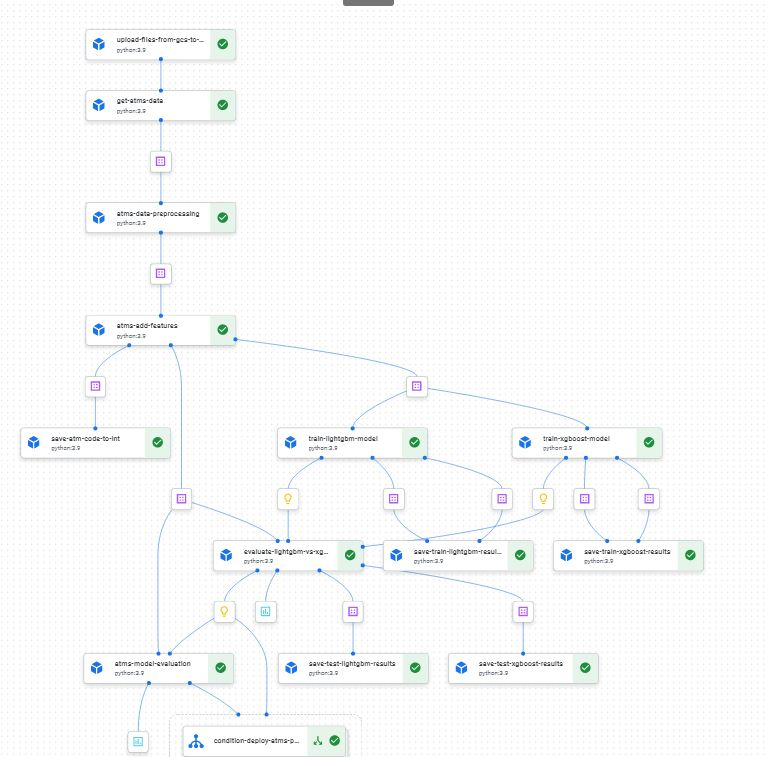

✓ Outlier Detection

✓ Handling Missing Values

✓ Normalisation

✓ Feature Engineering

✓ Feature Selection
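The five preprocessing steps above can be sketched for a single ATM's daily withdrawal series as follows. This is a minimal, standard-library illustration under stated assumptions, not the pipeline used in this solution: Tukey's IQR fences stand in for outlier detection, median imputation for missing values, min-max scaling for normalisation, weekday and month-end flags for feature engineering, and a fixed column list for feature selection. All function names, column names, and the sample data are hypothetical.

```python
from datetime import date, timedelta
from statistics import median, quantiles


def fill_missing(values):
    """Handle missing values: replace None with the median of observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def cap_outliers(values, k=1.5):
    """Outlier handling: clip values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


def min_max(values):
    """Normalisation: rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def add_calendar_features(start, values):
    """Feature engineering: attach day-of-week and month-end flags to each day."""
    rows = []
    for i, v in enumerate(values):
        day = start + timedelta(days=i)
        rows.append({
            "date": day,
            "withdrawals_scaled": v,
            "day_of_week": day.weekday(),  # Monday = 0
            "is_month_end": (day + timedelta(days=1)).day == 1,
        })
    return rows


def select_features(rows, keep):
    """Feature selection: keep only the named columns."""
    return [{name: row[name] for name in keep} for row in rows]


def preprocess(start, raw, keep=("withdrawals_scaled", "day_of_week", "is_month_end")):
    # Missing values are filled first so the quantile computation sees a complete series.
    filled = fill_missing(raw)
    capped = cap_outliers(filled)
    scaled = min_max(capped)
    return select_features(add_calendar_features(start, scaled), keep)


# Hypothetical daily withdrawals for one ATM, with one gap (None) and one spike (1000).
rows = preprocess(date(2023, 1, 25), [100, 110, None, 105, 1000, 95, 102])
```

Filling gaps before computing quartiles keeps the sketch simple; a production pipeline might instead detect outliers on observed values only, or use a method suited to the strong weekly seasonality of cash demand.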

Options

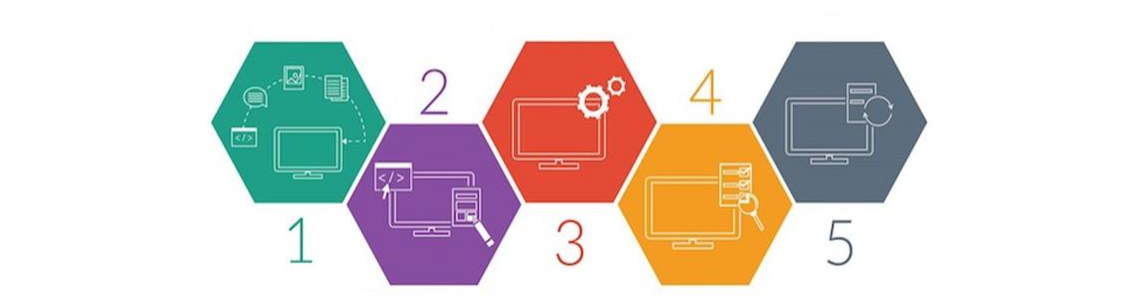

Vertex AI provides an easy-to-use interface for loading and preprocessing data. It can be used to load transaction data together with other relevant data, such as weather information and economic indicators.
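Combining transaction data with weather and economic data comes down to aggregating transactions per ATM and day, then joining the external sources on date. The sketch below shows only the shape of that join using the standard library; it does not use any Vertex AI API. The record layout, the `rain_mm` and `cpi` fields, and the sample values are hypothetical, and in practice such a table would more likely be prepared in a warehouse before being loaded into Vertex AI.

```python
from collections import defaultdict
from datetime import date


def aggregate_daily(transactions):
    """Sum withdrawal amounts per (atm_id, date) from raw transaction records."""
    totals = defaultdict(float)
    for atm_id, day, amount in transactions:
        totals[(atm_id, day)] += amount
    return totals


def join_external(daily_totals, weather_by_date, indicator_by_month):
    """Left-join daily totals with weather (by date) and an economic indicator (by month)."""
    rows = []
    for (atm_id, day), total in sorted(daily_totals.items()):
        rows.append({
            "atm_id": atm_id,
            "date": day,
            "withdrawals": total,
            "rain_mm": weather_by_date.get(day),  # None when no weather record exists
            "cpi": indicator_by_month.get((day.year, day.month)),  # monthly indicator
        })
    return rows


transactions = [
    ("ATM-001", date(2023, 3, 1), 200.0),
    ("ATM-001", date(2023, 3, 1), 50.0),
    ("ATM-002", date(2023, 3, 1), 120.0),
    ("ATM-001", date(2023, 3, 2), 80.0),
]
weather = {date(2023, 3, 1): 4.2}
indicators = {(2023, 3): 101.3}
rows = join_external(aggregate_daily(transactions), weather, indicators)
```

Keeping it a left join means days without external data still appear (with `None`), so the missing-value step decides how to treat them rather than silently dropping transaction days.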


Vertex AI offers pre-built training options, such as AutoML, that can train models on this data without writing model code. It also lets users train their own custom models with their own training code.
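Whichever model is trained, it helps to compare it against a simple baseline. The sketch below is a hypothetical day-of-week average forecaster for one ATM, written with the standard library; it is not a Vertex AI model, and the class name, data layout, and sample history are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import date, timedelta


class DayOfWeekBaseline:
    """Forecast each day's cash demand as the historical mean for that weekday."""

    def fit(self, history):
        # history: list of (date, amount) pairs for a single ATM
        if not history:
            raise ValueError("history must not be empty")
        sums, counts = defaultdict(float), defaultdict(int)
        for day, amount in history:
            sums[day.weekday()] += amount
            counts[day.weekday()] += 1
        self.means_ = {wd: sums[wd] / counts[wd] for wd in sums}
        # Fallback for weekdays never seen in the history
        self.overall_ = sum(amount for _, amount in history) / len(history)
        return self

    def predict(self, days):
        return [self.means_.get(day.weekday(), self.overall_) for day in days]


start = date(2023, 1, 2)  # a Monday
amounts = [100, 100, 100, 100, 200, 300, 50, 120, 100, 100, 100, 220, 300, 70]
history = [(start + timedelta(days=i), a) for i, a in enumerate(amounts)]
model = DayOfWeekBaseline().fit(history)
forecast = model.predict([date(2023, 1, 16), date(2023, 1, 20), date(2023, 1, 22)])
```

Here `forecast` is `[110.0, 210.0, 60.0]` (Monday, Friday, Sunday). A trained model that cannot beat such a baseline on held-out days is a signal to revisit the features or the data.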


Vertex AI makes trained models easy to deploy: once a model is trained, it can be deployed to a production environment and used to make predictions in real time.

Vertex AI provides monitoring tools that let users track the performance of deployed models over time. This helps ensure that the models continue to make accurate predictions.
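One simple way to track forecast quality over time is to score consecutive windows of actual versus predicted demand and flag windows whose error exceeds a limit. The sketch below does this with mean absolute percentage error (MAPE) over weekly windows. It does not use Vertex AI's monitoring service; the 7-day window, the 20% threshold, and the sample data are hypothetical choices for illustration.

```python
import math


def mape(actual, predicted):
    """Mean absolute percentage error, skipping days with zero actual demand."""
    pairs = [(a, p) for a, p in zip(actual, predicted) if a != 0]
    if not pairs:
        return math.nan  # nan > threshold is False, so an all-zero window never alerts
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


def monitor(actual, predicted, window=7, threshold=20.0):
    """Score consecutive, non-overlapping windows and flag those whose MAPE exceeds threshold."""
    report = []
    for start in range(0, len(actual) - window + 1, window):
        end = start + window
        score = mape(actual[start:end], predicted[start:end])
        report.append({"last_day": end - 1, "mape": score, "alert": score > threshold})
    return report


actual = [100.0] * 14
predicted = [110.0] * 7 + [150.0] * 7  # the model drifts in the second week
report = monitor(actual, predicted)
```

The first week scores about 10% (no alert) and the second 50% (alert). MAPE is easy to explain to business users, but it is undefined on zero-demand days, which is why those days are skipped here.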


Evaluate the performance of the current model and improve it as needed

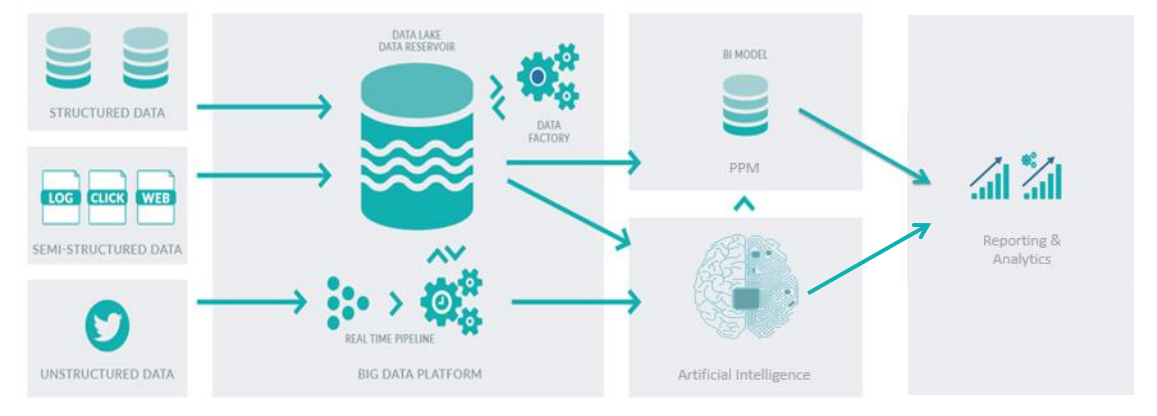

Determine cash-in-transit (CIT) truck routing to reduce costs further

Scale to the entire ATM network and include cash demand across all branches

Accurately predict the cash demand per ATM

Our solution:

Roadmap
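The routing item in the roadmap is read here, as an assumption, as planning the routes of the vehicles that replenish ATMs. A minimal sketch of linking forecasts to routing: select the ATMs whose forecast demand exceeds their cash on hand, then order the visits with a greedy nearest-neighbour heuristic on planar coordinates. The heuristic is swapped in for illustration and is not guaranteed to find the shortest route; the coordinates, amounts, and function names are all hypothetical.

```python
import math


def atms_to_visit(forecast, cash_on_hand):
    """Select ATMs whose forecast demand exceeds the cash they currently hold."""
    return {atm for atm, demand in forecast.items() if demand > cash_on_hand[atm]}


def nearest_neighbour_route(depot, stops):
    """Greedy tour: repeatedly drive to the closest unvisited ATM (not guaranteed optimal)."""
    remaining = dict(stops)  # atm_id -> (x, y)
    current, route = depot, []
    while remaining:
        nxt = min(remaining, key=lambda atm: math.dist(current, remaining[atm]))
        route.append(nxt)
        current = remaining.pop(nxt)
    return route


def route_length(depot, stops, route):
    """Total distance of depot -> route stops -> back to depot."""
    points = [depot] + [stops[atm] for atm in route] + [depot]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


coords = {"ATM-A": (1, 0), "ATM-B": (5, 0), "ATM-C": (2, 0), "ATM-D": (0, 1)}
forecast = {"ATM-A": 500, "ATM-B": 800, "ATM-C": 300, "ATM-D": 100}
cash_on_hand = {"ATM-A": 200, "ATM-B": 100, "ATM-C": 50, "ATM-D": 400}

stops = {atm: coords[atm] for atm in atms_to_visit(forecast, cash_on_hand)}
route = nearest_neighbour_route((0, 0), stops)
distance = route_length((0, 0), stops, route)
```

ATM-D is skipped because it holds enough cash, and the route visits ATM-A, ATM-C, then ATM-B for a round trip of 10 distance units. A real deployment would likely need road distances, vehicle capacities, and time windows, which calls for a proper vehicle-routing solver rather than this heuristic.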